Research Article - (2025) Volume 3, Issue 2

Transforming Architectural Rendering Through Artificial Intelligence Innovations: Use of EvolveLab Plugin in Comparison to Midjourney, DALL-E, and Stable Diffusion

Received Date: Jan 17, 2025 / Accepted Date: Feb 20, 2025 / Published Date: Feb 26, 2025

Copyright: ©2025 Abdullah Saeed, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Saeed, A., Akhtar, M., Ali, M. (2025). Transforming Architectural Rendering Through Artificial Intelligence Innovations: Use of EvolveLab Plugin in Comparison to Midjourney, DALL-E, and Stable Diffusion. Eng OA, 3(2), 01-13.

Abstract

The evolution of artificial intelligence (AI) has revolutionized architectural rendering, enhancing both efficiency and creativity. This paper explores the impact of AI innovations on architectural rendering, specifically comparing the EvolveLab plugin with Midjourney, DALL-E, and Stable Diffusion. EvolveLab, an AI-based tool, facilitates advanced rendering techniques, while Midjourney, DALL-E, and Stable Diffusion are cutting-edge generative models. This study evaluates the performance, quality, and applicability of these AI tools in the architectural domain, providing insights into their practical implementations and benefits. By integrating AI into architectural workflows, designers can achieve unprecedented levels of detail and realism in their renderings.

Introduction

In the dynamic world of architecture, the power of Artificial Intelligence (AI) is pushing the boundaries of creativity and efficiency, revolutionizing the process of architectural rendering. With the advent of AI technologies, architects and designers are equipped with cutting-edge tools to create visually stunning, realistic, and innovative representations of their architectural visions. This research paper delves into the transformative influence of AI in architectural rendering, focusing on the comparative analysis of four prominent AI innovations: Midjourney, DALL-E, EvolveLab, and Stable Diffusion (Joern Ploennigs, AI Art in Architecture, 2020) [1]. Each of these innovations introduces novel methodologies that reframe the process of architectural visualization.

The realm of architectural visualization has undergone a paradigm shift with the integration of AI-powered tools, enabling architects to explore uncharted territories and streamline their design processes. Each AI innovation under scrutiny possesses distinct characteristics. Through a comprehensive evaluation of these AI technologies, we aim to provide a holistic understanding of their individual contributions and potential synergies, presenting insights into their applications in architectural rendering. In the sections that follow, we unravel the key features and functionalities of each AI tool, dissecting their generative processes and input sources. We explore the application of EvolveLab in architectural rendering in comparison to Midjourney, DALL-E, and Stable Diffusion, focusing on how each technology elevates the visualization of architectural designs and addresses the challenges faced by modern-day architects. Moreover, this research paper sheds light on the unique strengths and limitations of the AI plugins and software under discussion within the architectural context [4]. By presenting an unbiased examination of their functionalities, we aim to help architects, designers, and stakeholders make informed decisions about incorporating these AI tools into their design workflows. As we embark on this journey of discovery, it is essential to recognize that the intersection of AI and architectural rendering is a rapidly evolving landscape. The AI innovations we discuss herein have the potential to reshape architectural practice, ushering in a new era of innovation, efficiency, and artistic expression. However, they also pose challenges and ethical considerations that warrant exploration.

In conclusion, this research paper endeavors to paint a holistic picture of AI's transformative role in architectural rendering, exploring the contributions of Midjourney, DALL-E, EvolveLab, and Stable Diffusion to the ever-evolving architecture industry. By harnessing the capabilities of these AI tools, architects can unleash their creative potential, elevate design realism, optimize architectural solutions, and create spaces that transcend imagination. Through a deeper understanding of AI in architectural rendering, we aim to contribute to the growing body of knowledge that will shape the future of architectural practice, enriching the built environment for generations to come [6].

Contextual Information

The field of architecture stands at the cusp of a transformative era, where integrating the innovations of Artificial Intelligence (AI) is reshaping the very foundation of architectural rendering. Over the years, architects and designers have relied on traditional methods to visualize their ideas, often limited by manual processes that entail substantial time and effort [3]. However, the advent of AI technologies has revolutionized this landscape, offering a new paradigm that not only accelerates the design process but also elevates creativity, realism, and efficiency in architectural visualization. Some key aspects that assisted in achieving the goals of photorealism are:

Architectural Rendering: Bridging Imagination and Reality

Architectural rendering, the art of creating visual representations of architectural designs, serves as a crucial bridge between conceptual ideas and tangible structures. The demand for architectural visualization that is not only visually captivating but also conveys accurate and immersive experiences has fueled the exploration of AI-powered tools that leverage deep learning, generative algorithms, and advanced simulations.

The Convergence of AI and Architectural Rendering

The convergence of AI and architectural rendering presents a multifaceted potential that ranges from achieving photorealistic representations to generating imaginative design concepts, optimizing spatial arrangements, and streamlining material selection. AI tools have emerged as pivotal instruments that allow architects to experiment with diverse design alternatives, iterate swiftly through options, and make data-driven decisions that align with project objectives (Dollens, 2023).

Understanding Generative Processes and Practical Applications

Within this dynamic context, it becomes imperative to examine the underpinning generative processes of each AI tool and their practical applications in architectural rendering. By analyzing their capabilities, strengths, and limitations, architects can make informed decisions about integrating these AI technologies into their design workflows, thereby harnessing their potential to enrich the architectural practice (Dollens, 2023).

Navigating Ethical Considerations in AI-Driven Architectural Rendering

Moreover, the rapid evolution of AI in architectural rendering is accompanied by ethical considerations that warrant careful examination. As architects embrace AI-powered tools, it is vital to ensure that the integration aligns with ethical principles, respects human agency, addresses biases, and maintains a focus on sustainability and inclusivity (Dollens, 2023). This research aims to shed light on these ethical dimensions and offer insights into responsible AI utilization in architectural rendering.

Emerging Possibilities and Complexities

As we delve into this exploration, we are confronted with a landscape that is both exciting and complex. The confluence of AI's computational prowess and architectural creativity has the potential to redefine not only the visual representation of spaces but also the very essence of design thinking. As architects and designers venture into this uncharted territory, guided by the possibilities and mindful of the challenges, the future of architectural rendering emerges as a canvas that blends human ingenuity with artificial intelligence, promising a new era of innovation, efficiency, and design excellence (Dollens, 2023).

Literature Review

The integration of artificial intelligence (AI) in architecture has revolutionized the design process, particularly with the advent of AI-generated renders. This section explores five key articles that delve into the potential and applications of AI-generated renders in architectural design, emphasizing their role in shaping the future of architecture. Joern Ploennigs, for instance, in his article titled “AI Art in Architecture”, discussed how diffusion-based AI art platforms, including Midjourney, DALL-E 2, and Stable Diffusion, have emerged as powerful tools for conceptual design during architectural ideation, sketching, and modeling. He also investigated the applicability of these platforms during the design process of architectural projects, showcasing how AI-generated renders efficiently address design challenges and facilitate effective design communication (Joern Ploennigs, AI Art in Architecture, 2020). Analyzing 58 million publicly available queries from Midjourney, Ploennigs (2023) further revealed that AI image generation models are widely adopted in architectural design. These AI-generated renders support creativity and unexpected discovery of ideas, expanding architects' design exploration and enhancing the overall design process (Joern Ploennigs, ANALYSING THE USAGE OF AI ART TOOLS FOR ARCHITECTURE, 2023) [2]. Additionally, the author’s study highlighted that AI-generated renders play a meaningful role in the design process when design constraints are carefully considered. These generative tools encourage an imaginative mindset, leading to the discovery of innovative ideas and enriching architectural concepts (Joern Ploennigs, ANALYSING THE USAGE OF AI ART TOOLS FOR ARCHITECTURE, 2023) [2]. On the other hand, in "Text-to-Image Generation: A.I. in Architecture", Oppemleander discusses the evolving role of AI algorithms in architectural design, particularly in text-to-image generation. He shows ways in which AI-powered tools like DALL-E 2 and Midjourney enable architects to visualize ideas and explore a wider range of design possibilities. The focus of his paper is on how AI-generated representations enhance architects' creative abilities and promote spontaneity during the design phase [3]. Lastly, the article "Stable Diffusion, DALL-E 2, Midjourney, and Metabolic Architectures" delves into the transformative impact of generative image-making in architecture. It demonstrates the integration of AI-generated renders, stemming from designers' natural language prompts, enabling the creation of morphological images and fostering a close partnership between AI aesthetics and human cognition. The article further underlines that such AI-human partnerships elevate architectural design, offering new possibilities for bioremediating structures/components and innovative architectural concepts.

Taken together, these articles indicate that AI-generated renders have emerged as game-changers in architectural design and visualization, empowering architects to explore, communicate, and envision ideas with unprecedented ease and creativity. The reviewed articles collectively underscore the transformative role of AI in architecture, showcasing how AI-generated renders, driven by platforms like Midjourney, DALL-E 2, and Stable Diffusion, enhance the design process, support creativity, and bridge the gap between human cognition and AI aesthetics. As architects embrace the potential of AI-generated renders and continue to explore their applications, the future of architecture stands poised to embrace innovative, sustainable, and human-centric design solutions.

AI Innovations in Architectural Rendering

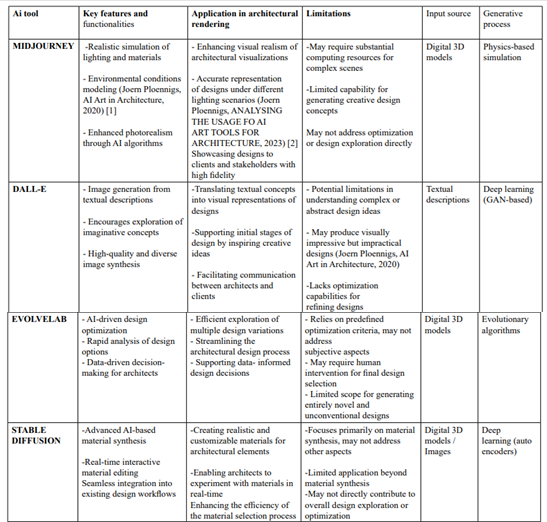

In this section, we embark on an in-depth exploration of the AI innovations that are transforming the landscape of architectural rendering. Each AI tool—Midjourney, DALL-E, EvolveLab, and Stable Diffusion—has its unique characteristics, functionalities, and underlying generative processes that contribute to its application in architectural design visualization, as introduced below:

Midjourney

Midjourney stands at the forefront of architectural rendering by offering a powerful suite of tools to achieve unparalleled photorealism and environmental accuracy. Through its sophisticated physics-based simulation, Midjourney captures the intricacies of light propagation, material interactions, and atmospheric conditions. These capabilities enable architects and designers to create realistic renderings that closely mimic the behavior of light in real-world settings (Joern Ploennigs, AI Art in Architecture, 2020). Whether it's natural daylight flooding through a building’s windows, the interplay of light and shadows within the interior spaces, or the impact of artificial lighting, Midjourney's simulation engine empowers architects to showcase their designs with exceptional reliability. Moreover, its ability to model diverse environmental conditions allows architects to present their designs within various contexts, from daylight to night-time and in different weather scenarios.

DALL-E

DALL-E, an innovation from OpenAI, has revolutionized architectural rendering by enabling the translation of textual concepts into stunning visual representations. Leveraging the power of deep learning and Generative Adversarial Networks (GANs), DALL-E can synthesize high-quality images from textual prompts. Architects and designers can now communicate their design ideas using natural language, and DALL-E interprets these descriptions to create photorealistic visualizations. This unique functionality not only accelerates the early stages of the design process but also encourages exploration of imaginative and unconventional architectural concepts. With DALL-E, architects can vividly portray complex and abstract ideas that may otherwise be challenging to articulate through traditional means, fostering a deeper understanding between architects, clients, and stakeholders.

Stable Diffusion

Stable Diffusion introduces a new frontier in architectural rendering with its advanced AI-based material synthesis capabilities. Through deep learning techniques like autoencoders, Stable Diffusion can generate and customize realistic materials for various architectural elements (Joern Ploennigs, AI Art in Architecture, 2020) [1]. Architects can experiment in real time with a vast array of materials, simulating different finishes and textures, leading to more informed material selection (YILDIRIM, 2022) [5]. By integrating seamlessly into existing design workflows, Stable Diffusion enhances the efficiency of the material selection process and empowers architects to achieve precisely the desired visual and tactile qualities for their designs. Stable Diffusion's focus on material synthesis addresses a critical aspect of architectural rendering, enriching the experience of interacting with architectural spaces.

EvolveLab: Efficient Design Optimization and Decision-Making

EvolveLab emerges as a groundbreaking plugin for popular architectural design software like Revit and SketchUp, revolutionizing the design optimization process. With a user-friendly interface, EvolveLab seamlessly integrates into the architectural workflow, enabling architects to efficiently explore design variations directly within the 3D view of their models. Through an AI-driven approach, EvolveLab rapidly assesses design options based on predefined criteria and prompt-driven inputs. Architects can interactively adjust and optimize key design parameters, such as building dimensions, materials, and lighting conditions, directly within the 3D model. EvolveLab's evolutionary algorithms intelligently adapt to architects' preferences and prompt inputs, generating optimized design solutions that align with the desired outcomes [6]. This streamlined approach empowers architects to make data-driven decisions, balancing creative aspirations with practical considerations. Moreover, EvolveLab's real-time rendering capabilities allow architects to visualize and assess the impact of design changes instantly. This fosters an iterative design process, where architects can refine and fine-tune their designs on the fly, leading to well-informed and optimized architectural solutions. By offering an intuitive and interactive experience, EvolveLab greatly enhances the efficiency of the architectural design process, promoting better-informed decision-making and ultimately leading to more innovative and optimized designs. In the subsequent sections, we further explore the capabilities and applications of EvolveLab, along with its synergies and unique contributions to the realm of architectural rendering.

Features and Functionalities in Architectural Rendering

In the realm of architectural rendering, each AI innovation (Midjourney, DALL-E, EvolveLab, and Stable Diffusion) offers a unique set of features and functionalities that elevate the visualization and design process for architects. Let's explore these innovations in the context of architectural rendering:

Detailed Analysis of Midjourney's Features

Midjourney introduces a suite of powerful features tailored for architects seeking unmatched realism and environmental accuracy in their visualizations.

Lighting Simulation

Midjourney's advanced physics-based simulation accurately models the behavior of light within architectural spaces. Architects can visualize how natural and artificial lighting interacts with their designs, resulting in captivating and true-to-life renderings.

Material Interactions

Midjourney's simulation considers material properties, accurately depicting how they reflect and transmit light. This enables architects to showcase a wide range of materials with various finishes, ensuring visual accuracy in their design presentations.

Environmental Conditions

With Midjourney, architects can explore different environmental scenarios such as weather conditions, time of day, and seasonal changes. This functionality aids in understanding how designs respond to different lighting and atmospheric contexts (YILDIRIM, 2022) [5].

Daylight Analysis

Midjourney offers in-depth daylight analysis, empowering architects to optimize their designs for natural light utilization and energy efficiency. This feature supports sustainable architectural practices.

Unpacking DALL-E's Capabilities

DALL-E's transformative capabilities lie in its text-to-image synthesis, redefining how architects communicate and conceptualize their designs:

Text-to-Image Synthesis

Architects can describe design concepts using natural language, and DALL-E converts these textual prompts into realistic visual representations. This functionality accelerates the early stages of design ideation and facilitates clearer communication with clients.

Exploring Imagination

DALL-E encourages architects to explore their imaginative and creative ideas. By visualizing abstract concepts described in text, architects gain novel perspectives and inspiration for their architectural visions.

Diverse Image Synthesis

DALL-E can generate diverse and high-quality images, allowing architects to rapidly explore numerous design alternatives. This fast iteration process aids in decision-making and the exploration of design variations.

Understanding EvolveLab's Functionalities

As a plugin for Revit and SketchUp, EvolveLab simplifies design optimization and rendering, offering intuitive features for architects:

Interactive Design Optimization

EvolveLab allows architects to interactively explore design variations directly within the three-dimensional (3D) view of their models. Evolutionary algorithms adapt to user inputs, generating optimized design solutions based on specified criteria (Yutong Xie, 2023) [6].

Prompt-Driven Rendering

Architects can guide the rendering process using specific prompts, tailoring the visual outcomes to meet design objectives. This prompt-based approach leads to data-driven design decisions.

Real-Time Rendering

EvolveLab's real-time rendering capabilities empower architects to visualize design changes instantaneously. This iterative process facilitates quick refinements and enhances the exploration of design possibilities.

Delving into Stable Diffusion's Material Synthesis

Stable Diffusion revolutionizes architectural material selection and customization through advanced AI-based functionalities:

AI-Generated Materials

Utilizing deep learning techniques like autoencoders, Stable Diffusion generates realistic and customizable materials for architectural elements. Architects can experiment with a wide array of finishes and textures effortlessly.

Seamless Integration

Stable Diffusion seamlessly integrates into existing design workflows, providing architects with a user-friendly and efficient material selection process.

Applications in Architectural Rendering

In this section, we explore how each AI innovation—Midjourney, DALL-E, EvolveLab, and Stable Diffusion—finds practical applications in architectural rendering, revolutionizing the way architects visualize and communicate their designs.

Realistic Visualization with Midjourney

Midjourney's advanced physics-based simulation and lighting accuracy make it an invaluable tool for architects aiming to achieve unparalleled realism in their visualizations. The applications of Midjourney in architectural rendering are extensive:

Photorealistic Presentations

Architects can create visually stunning presentations that showcase their designs with exceptional realism. Midjourney's precise lighting simulation accurately portrays the interplay of light and shadows, delivering captivating visual experiences.

Daylight Analysis

Architects can conduct in-depth daylight analysis to optimize building designs for natural illumination. By understanding how light interacts with architectural elements, architects can enhance energy efficiency and occupant comfort.

Creative Applications of DALL-E in Architectural Designs

DALL-E's transformative ability to generate images from textual prompts opens up new creative avenues for architects, enhancing design ideation and communication:

Conceptual Design Exploration

Architects can experiment with creative and abstract design concepts by providing textual descriptions to DALL-E. This encourages a more fluid and imaginative approach to design, leading to unconventional yet inspiring architectural visions (Joern Ploennigs, AI Art in Architecture, 2020) [1].

Visualizing Textual Briefs

When clients provide design briefs in the form of text, architects can utilize DALL-E to quickly translate these descriptions into vivid visualizations. This not only expedites the design process but also fosters better client understanding and engagement. DALL-E empowers architects to create compelling design narratives through textual and visual storytelling. This combination of language and imagery enhances the presentation of design concepts to clients and stakeholders.

Streamlining Design Process with EvolveLab

EvolveLab's interactive design optimization and rendering functionalities significantly streamline the architectural design process:

Efficient Design Iteration

Architects can rapidly explore multiple design variations within the 3D view of their models using EvolveLab. This iterative approach allows for quick refinements and data-driven decision-making.

Design Optimization for Performance Metrics

EvolveLab enables architects to optimize designs based on specific performance metrics such as cost, energy efficiency, or spatial functionality. This results in well-informed design decisions that align with project objectives.
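To make the idea of optimizing against several performance metrics concrete, the following is a minimal sketch of a weighted-sum score. It is an illustration only and not EvolveLab's scoring method: the function `design_score`, the metric names, the weights, and the value ranges are all hypothetical assumptions introduced here.

```python
def design_score(metrics, weights, bounds):
    """Weighted average of metrics, each rescaled to [0, 1] where 1 is best.

    bounds maps a metric name to (low, high, higher_is_better).
    All names and numbers used with this function are hypothetical.
    """
    total = 0.0
    for name, weight in weights.items():
        low, high, higher_is_better = bounds[name]
        scaled = (metrics[name] - low) / (high - low)
        scaled = min(1.0, max(0.0, scaled))      # clamp out-of-range values
        if not higher_is_better:
            scaled = 1.0 - scaled                # e.g. lower cost is better
        total += weight * scaled
    return total / sum(weights.values())


# Hypothetical weights and plausible ranges for three metrics.
weights = {"cost": 0.5, "energy_kwh_m2": 0.3, "usable_area_m2": 0.2}
bounds = {
    "cost": (1.0e6, 2.0e6, False),
    "energy_kwh_m2": (50.0, 150.0, False),
    "usable_area_m2": (800.0, 1200.0, True),
}

option_a = {"cost": 1.5e6, "energy_kwh_m2": 100.0, "usable_area_m2": 1000.0}
option_b = {"cost": 1.0e6, "energy_kwh_m2": 150.0, "usable_area_m2": 1200.0}

score_a = design_score(option_a, weights, bounds)
score_b = design_score(option_b, weights, bounds)
```

Under these assumed weights, option B scores higher than option A because its low cost and large usable area outweigh its poor energy figure; changing the weights changes the ranking, which is the kind of trade-off an architect would need to set explicitly.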

Client Collaboration and Feedback

Architects can use EvolveLab to collaborate with clients during the design process. The real-time rendering capabilities facilitate prompt feedback and allow clients to visualize design changes instantly.

Material Innovation using Stable Diffusion

Stable Diffusion's AI-based material synthesis enriches the architectural material selection process:

Material Customization

Architects can experiment with an extensive range of materials and finishes, tailoring them to suit the design's aesthetic and functional requirements. This empowers architects to create unique and visually appealing architectural spaces. Stable Diffusion facilitates rapid prototyping of material choices, reducing time spent on physical material sampling. Architects can virtually visualize and assess various material combinations, leading to more efficient material selection.

Sustainable Material Exploration

Architects can explore sustainable material alternatives using Stable Diffusion's generative capabilities. This aligns with the growing emphasis on environmentally conscious design practices.

Generative Processes in Architectural Rendering

The heart of AI innovations in architectural rendering lies in their underlying generative processes. Each AI tool (Midjourney, DALL-E, EvolveLab, and Stable Diffusion) employs distinct methodologies to create visual representations and optimize architectural designs.

Physics-based Simulation in Midjourney

Midjourney's generative process revolves around its sophisticated physics-based simulation engine. By simulating the behavior of light, materials, and environmental conditions, Midjourney achieves remarkable realism in architectural visualizations:

Midjourney traces the paths of light rays as they interact with architectural elements. This enables accurate portrayal of shadows, reflections, and diffused lighting, resulting in visually convincing renderings (Joern Ploennigs, AI Art in Architecture, 2020).
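The notion of tracing light rays to obtain shadows and diffuse lighting can be illustrated with a generic textbook sketch. The code below is not drawn from Midjourney and makes no claim about its internals; the function names `ray_sphere_hit` and `lambert_shade`, and the use of spheres as the only geometry, are assumptions made for this illustration.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None.

    Assumes the ray origin lies outside the sphere.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c          # quadratic coefficient a == 1 for a unit ray
    if disc < 0:
        return None                 # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-6 else None  # ignore hits behind or at the origin


def lambert_shade(point, normal, light_pos, occluders):
    """Diffuse brightness in [0, 1]; 0.0 when a shadow ray is blocked."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    for center, radius in occluders:
        t = ray_sphere_hit(point, direction, center, radius)
        if t is not None and t < dist:
            return 0.0              # something sits between point and light
    # Lambert's cosine law: brightness follows the angle to the light.
    return max(0.0, sum(n * d for n, d in zip(normal, direction)))


floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)
light = (0.0, 10.0, 0.0)
lit = lambert_shade(floor_point, floor_normal, light, occluders=[])
shadowed = lambert_shade(floor_point, floor_normal, light,
                         occluders=[((0.0, 5.0, 0.0), 1.0)])
```

Here a floor point directly under the light is fully lit, and the same point falls into shadow once a sphere is placed between it and the light. Reflections would follow the same pattern, with a secondary ray cast along the mirrored direction.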

9.1 Material Interactions

Through physics-based simulations, Midjourney replicates how light interacts with various materials. This includes surface properties such as reflectivity, transparency, and diffusion, contributing to the lifelike appearance of materials in the visualizations.

9.2 Environmental Dynamics

Midjourney models environmental conditions such as weather, time of day, and seasonal changes. Architects can visualize their designs within different contexts, allowing them to understand how the building responds to varying lighting and atmospheric conditions (Joern Ploennigs, AI Art in Architecture, 2020) [1].

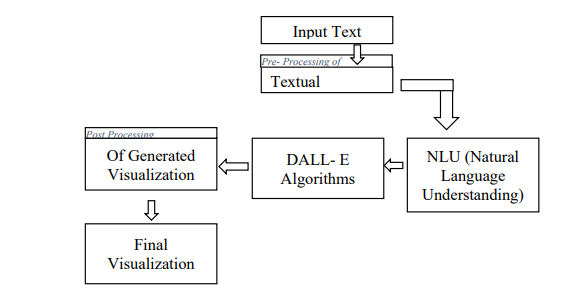

9.3 Deep Learning (GAN-based) Image Synthesis with DALL-E

DALL-E's generative process harnesses the power of deep learning and Generative Adversarial Networks (GANs) to create visual representations from textual descriptions:

9.4 Encoder-Decoder Framework

DALL-E utilizes an encoder-decoder architecture to map textual descriptions into a latent space and then decode them into visual images. The encoder captures semantic information, while the decoder synthesizes the images based on the encoded text.

9.5 Generative Adversarial Networks (GANs)

GANs play a crucial role in DALL-E's image synthesis. A generator network produces images from random noise (latent vectors), while a discriminator network tries to differentiate between generated images and real images. The generator continually refines its output to deceive the discriminator, leading to increasingly realistic images.
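The generator and discriminator dynamic described above can be sketched with a deliberately tiny, one-dimensional GAN in which each "image" is a single number and both networks are linear. This is a generic teaching example under those assumptions, not DALL-E's implementation; the class `ToyGAN`, its parameters, and the learning rate are hypothetical.

```python
import math
import random


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def discriminator_loss(score_real, score_fake):
    """Low when real samples score near 1 and generated samples near 0."""
    return -(math.log(score_real) + math.log(1.0 - score_fake))


class ToyGAN:
    """1-D stand-in: samples are single numbers, both networks are linear."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0  # generator: x = a * z + b
        self.w, self.c = 0.1, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        return sigmoid(self.w * x + self.c)

    def train_step(self, x_real, z, lr=0.05):
        x_fake = self.generate(z)
        # 1) Discriminator: one gradient-descent step on discriminator_loss.
        s_real, s_fake = self.discriminate(x_real), self.discriminate(x_fake)
        self.w -= lr * (-(1.0 - s_real) * x_real + s_fake * x_fake)
        self.c -= lr * (-(1.0 - s_real) + s_fake)
        # 2) Generator: descend -log D(x_fake) so its output scores as "real".
        s_fake = self.discriminate(x_fake)
        grad_x = -(1.0 - s_fake) * self.w
        self.a -= lr * grad_x * z
        self.b -= lr * grad_x


# Alternate the two updates; "real" data are numbers drawn around 4.0.
rng = random.Random(0)
gan = ToyGAN()
for _ in range(200):
    gan.train_step(x_real=rng.gauss(4.0, 0.5), z=rng.gauss(0.0, 1.0))
```

The alternating updates are the essential point: the discriminator is first nudged to separate real from generated samples, and the generator is then nudged in whichever direction raises the discriminator's score for its own output.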

9.6 Imagination Exploration

By exploring a vast latent space during the decoding process, DALL-E can visualize diverse design concepts, even those that are abstract and difficult to depict through traditional methods.

9.7 Evolutionary Algorithms in EvolveLab

EvolveLab's generative process is driven by evolutionary algorithms, revolutionizing design optimization and exploration:

9.8 Population-Based Search

EvolveLab maintains a population of design solutions (individuals) and iteratively evolves them through generations. Each generation undergoes selection, mutation, and crossover processes to generate new design variations.

9.9 Objective Function Optimization

Architects define objective functions to evaluate the fitness of each design solution. EvolveLab uses these functions to measure design performance against specific criteria, enabling architects to optimize their designs based on desired outcomes.

9.10 Data-Driven Design

By iteratively evolving designs based on fitness evaluations, EvolveLab supports data-driven decision-making, guiding architects towards solutions that meet predefined design objectives.
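The selection, crossover, and mutation cycle outlined above can be sketched as a small generic evolutionary algorithm. The code is illustrative and is not taken from EvolveLab: the function `evolve`, the truncation-selection and elitism choices, and the room-sizing objective `room_fitness` (a target floor area of 50 m² and a width-to-depth ratio of 1.5) are all assumptions introduced for this example.

```python
import random


def evolve(fitness, bounds, pop_size=20, generations=40,
           mutation_scale=0.1, seed=0):
    """Maximise `fitness` over real-valued designs within `bounds`."""
    rng = random.Random(seed)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]   # selection: keep the fitter half
        children = [ranked[0]]              # elitism: best design survives
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            # Uniform crossover: each parameter comes from either parent.
            child = [rng.choice(pair) for pair in zip(mom, dad)]
            # Gaussian mutation, clamped back into the allowed range.
            child = [min(hi, max(lo, g + rng.gauss(0.0, mutation_scale * (hi - lo))))
                     for g, (lo, hi) in zip(child, bounds)]
            children.append(child)
        population = children
    return max(population, key=fitness)


def room_fitness(design):
    """Hypothetical objective: ~50 m2 floor area, width/depth ratio ~1.5."""
    width, depth = design
    return -abs(width * depth - 50.0) - 5.0 * abs(width / depth - 1.5)


room_bounds = [(3.0, 12.0), (3.0, 12.0)]  # metres, assumed limits
best = evolve(room_fitness, room_bounds)
```

Because the best individual of each generation is carried over unchanged, the best fitness can never get worse from one generation to the next; the objective function is the only place where the architect's criteria enter the search.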

9.11 Deep Learning (Autoencoders) in Stable Diffusion

Stable Diffusion employs deep learning, specifically autoencoders, for material synthesis, offering a cutting-edge generative process:

9.12 Autoencoders

Autoencoders consist of an encoder and a decoder network. The encoder compresses input data (material properties) into a low-dimensional latent representation, and the decoder reconstructs the input from the latent space. Stable Diffusion's autoencoders enable material synthesis by generating realistic material properties from the latent space.
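As a minimal illustration of the encoder and decoder pair described above, the sketch below trains a linear autoencoder that compresses two material properties into a single latent number and reconstructs them. It is a toy under stated assumptions and not Stable Diffusion's model: the property pair (roughness, glossiness), the five sample materials, the initial weights, and the function names are all hypothetical.

```python
def encode(x, enc_w, enc_b):
    """Compress a property vector into one latent number."""
    return sum(w * v for w, v in zip(enc_w, x)) + enc_b


def decode(z, dec_w, dec_b):
    """Reconstruct the property vector from the latent number."""
    return [w * z + b for w, b in zip(dec_w, dec_b)]


def mean_loss(data, params):
    """Mean squared reconstruction error over the dataset."""
    enc_w, enc_b, dec_w, dec_b = params
    total = 0.0
    for x in data:
        recon = decode(encode(x, enc_w, enc_b), dec_w, dec_b)
        total += sum((r - v) ** 2 for r, v in zip(recon, x))
    return total / len(data)


def train(data, params, lr=0.05, steps=500):
    """Full-batch gradient descent on the reconstruction error."""
    enc_w, enc_b, dec_w, dec_b = params
    n, dim = len(data), len(data[0])
    for _ in range(steps):
        g_enc_w, g_enc_b = [0.0] * dim, 0.0
        g_dec_w, g_dec_b = [0.0] * dim, [0.0] * dim
        for x in data:
            z = encode(x, enc_w, enc_b)
            err = [r - v for r, v in zip(decode(z, dec_w, dec_b), x)]
            dz = sum(2.0 * e * w for e, w in zip(err, dec_w))  # dLoss/dz
            for i in range(dim):
                g_dec_w[i] += 2.0 * err[i] * z / n
                g_dec_b[i] += 2.0 * err[i] / n
                g_enc_w[i] += dz * x[i] / n
            g_enc_b += dz / n
        enc_w = [w - lr * g for w, g in zip(enc_w, g_enc_w)]
        enc_b -= lr * g_enc_b
        dec_w = [w - lr * g for w, g in zip(dec_w, g_dec_w)]
        dec_b = [b - lr * g for b, g in zip(dec_b, g_dec_b)]
    return enc_w, enc_b, dec_w, dec_b


# Hypothetical materials: (roughness, glossiness) with glossiness = 1 - roughness.
materials = [[r, 1.0 - r] for r in (0.1, 0.3, 0.5, 0.7, 0.9)]
initial = ([0.5, -0.5], 0.0, [0.5, -0.5], [0.0, 0.0])
trained = train(materials, initial)
```

Since the two assumed properties vary together along a single line, one latent dimension is enough to describe them, which is why the reconstruction error can fall well below its starting value after training.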

Material Customization

The autoencoder's latent space allows architects to explore a broad range of material variations by modifying latent vectors. This results in the generation of novel and customizable materials that can be applied to architectural elements (Dollens, 2023).
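Modifying latent vectors to obtain new material variations can be illustrated by interpolating between two latent codes and decoding each intermediate point. The sketch below assumes a hypothetical linear decoder with made-up weights and property names; it shows the general idea only and does not describe any particular tool.

```python
def lerp_latent(z_a, z_b, t):
    """Linear interpolation between two latent vectors, with t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def decode_material(z, decoder_weights, decoder_bias):
    """Hypothetical linear decoder: latent vector -> named material properties."""
    return {
        name: sum(w * v for w, v in zip(weights, z)) + decoder_bias[name]
        for name, weights in decoder_weights.items()
    }


# Made-up decoder parameters and latent codes, for illustration only.
decoder_weights = {"roughness": [0.4, 0.1], "reflectivity": [-0.3, 0.2]}
decoder_bias = {"roughness": 0.5, "reflectivity": 0.5}
z_concrete = [1.0, 0.0]   # assumed latent code of a rough material
z_steel = [-1.0, 1.0]     # assumed latent code of a glossy material

# Five variations blending from the first material to the second.
blend = [decode_material(lerp_latent(z_concrete, z_steel, i / 4),
                         decoder_weights, decoder_bias)
         for i in range(5)]
```

Each step along the interpolation yields a slightly different set of material properties, which is the sense in which a latent space supports gradual customization rather than a choice among fixed presets.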

Real-Time Material Editing

Architects can interactively edit material properties, immediately visualizing changes and their impact on the design. This facilitates informed decisions during the material selection process.

Future Directions and Ethical Considerations in Architectural Rendering

As AI continues to advance, the future of architectural rendering holds exciting possibilities. However, along with these potential advancements, there are ethical considerations that architects and the AI community must address to ensure responsible and beneficial use of AI in architectural design and visualization.

Potential Advancements in AI for Architectural Rendering

The future of AI in architectural rendering is likely to witness significant advancements that further enhance the design process and visualization capabilities:

Real-Time Interactive Rendering

AI-driven real-time rendering will enable architects to visualize design changes instantly, fostering a more iterative and efficient design process. This will empower architects to make faster and more informed decisions during the design development stage.

Advanced Generative Models

AI innovations may lead to more sophisticated generative models, surpassing current capabilities. Enhanced models could provide even more realistic renderings and explore abstract design concepts with greater fidelity, opening up new realms of creativity for architects.

Intelligent Design Assistance

AI tools might evolve into intelligent design assistants, providing context-aware suggestions and design optimizations. These assistants could help architects generate design solutions that align with specific project requirements, such as sustainability or user experience.

Virtual Reality (VR) Integration

AI-powered VR applications could enable architects and clients to experience designs in immersive virtual environments. VR integration may revolutionize the architectural presentation process, enhancing stakeholder engagement and understanding (Joern Ploennigs, ANALYSING THE USAGE OF AI ART TOOLS FOR ARCHITECTURE, 2023) [2].

AI-Driven Material Innovations

Future AI innovations might enable architects to explore novel and sustainable materials through advanced material synthesis techniques. This could lead to more environmentally conscious and innovative building designs.

Ethical Implications of AI in Architectural Design and Visualization

As architects embrace AI technologies in design and visualization, it is crucial to consider the ethical implications and potential challenges:

Human-Centered Design

While AI can expedite design processes, architects must ensure that human-centered design principles remain at the core. AI should augment human creativity and not replace human judgment and empathy in architectural design.

Data Privacy and Security

As AI tools collect and analyze design data, safeguarding sensitive information becomes paramount. Architects should prioritize data privacy and implement robust security measures to protect both client and project data.

Transparency and Explainability

AI algorithms can be complex and difficult to interpret. Ethical considerations demand that AI models used in architectural rendering should be transparent and explainable, enabling architects to understand how decisions are made.

Responsibility and Accountability

Architects must take responsibility for AI-generated designs and outcomes. Human oversight and accountability are essential to avoid potential design flaws or unintended consequences that AI may introduce.

Sustainability and Environmental Impact

AI in architectural rendering should contribute to sustainable design practices. Architects must ensure that AI tools support environmentally responsible decision-making and reduce the carbon footprint of design iterations.

Responsible Use of AI-Generated Content

AI-generated content, such as images and visualizations, should be used responsibly, considering copyright, licensing, and attribution concerns to respect intellectual property rights.

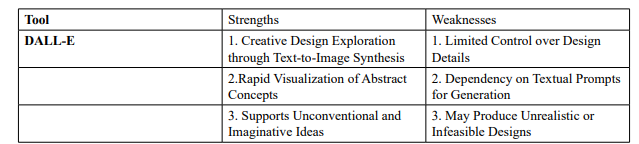

Comparative Analysis of DALL-E, Midjourney, and EvolveLab

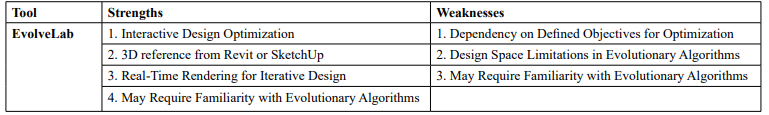

Strengths and Weaknesses: DALL-E

Flow Chart

The flowchart represents the multi-step process of DALL-E, including pre-processing of the textual description, natural language understanding (NLU), the DALL-E algorithm itself, and post-processing of the generated visualization.

EvolveLab

Flow Chart

The flowchart represents the multi-step process of EvolveLab, including data analysis, the use of evolutionary algorithms, evaluation of generated designs, and post-optimization analysis.
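The four stages named above can be illustrated with a generic evolutionary loop. This is only a minimal sketch under stated assumptions, not EvolveLab's actual implementation, which this paper does not document. The design parameters (a window-to-wall ratio and a roof pitch), the `fitness` objective, and the function names are hypothetical and chosen purely for illustration.

```python
import random


def fitness(design):
    """Hypothetical objective: prefer a window-to-wall ratio near 0.4
    and a roof pitch near 30 degrees. Higher is better; 0 is the optimum."""
    ratio, pitch = design
    return -((ratio - 0.4) ** 2) - ((pitch - 30.0) / 90.0) ** 2


def mutate(design, rng, scale=0.05):
    """Return a slightly perturbed copy of a design, clamped to valid ranges."""
    ratio, pitch = design
    ratio = min(1.0, max(0.0, ratio + rng.gauss(0.0, scale)))
    pitch = min(90.0, max(0.0, pitch + rng.gauss(0.0, scale * 90.0)))
    return (ratio, pitch)


def evolve(generations=50, population_size=20, seed=0):
    rng = random.Random(seed)
    # Stand-in for the data analysis stage: start from random candidate designs.
    population = [
        (rng.random(), rng.uniform(0.0, 90.0)) for _ in range(population_size)
    ]
    history = []  # best fitness per generation, kept for post-optimization analysis
    for _ in range(generations):
        # Evaluation of generated designs: rank candidates, best first.
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Evolutionary step: keep the top half and refill with mutated copies.
        survivors = population[: population_size // 2]
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best_design, best_per_generation = evolve()
    print("best design (ratio, pitch):", best_design)
    print("fitness:", fitness(best_design))
```

Because the best candidates always survive into the next generation, the recorded best fitness never decreases, which makes the `history` list a simple basis for the post-optimization analysis step.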

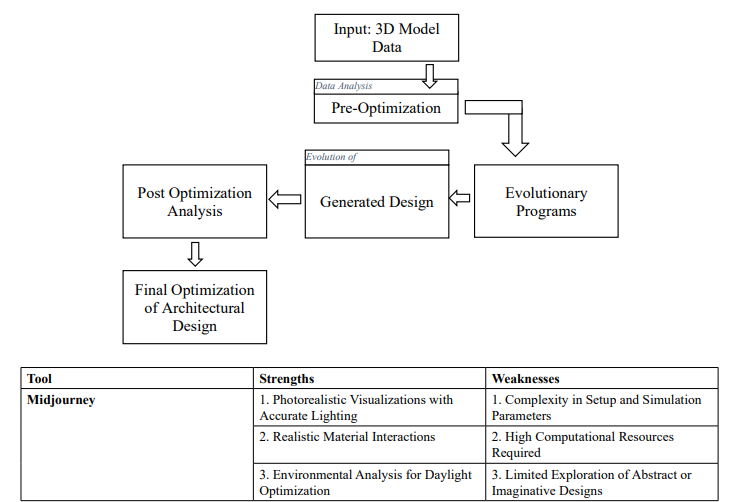

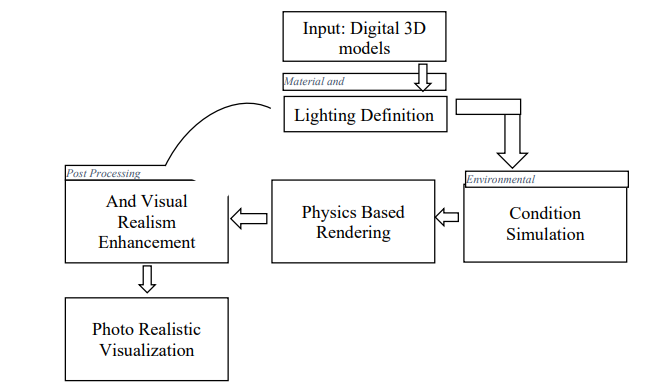

Midjourney

Flow Chart

The flowchart represents the multi-step process of Midjourney, including material and lighting definition, environmental condition simulation, physics-based rendering, and post-processing for enhancing visual realism.

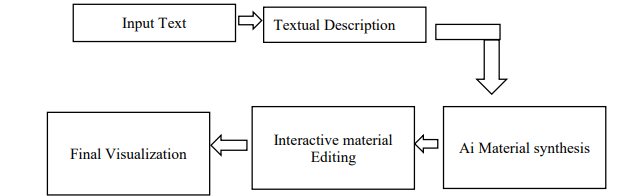

Stable Diffusion

Flow Chart

The tree-like flowchart represents the multi-step process of Stable Diffusion, including AI-based material synthesis, interactive material editing, and real-time rendering of architectural materials.

EvolveLab in Interior Rendering (Revit)

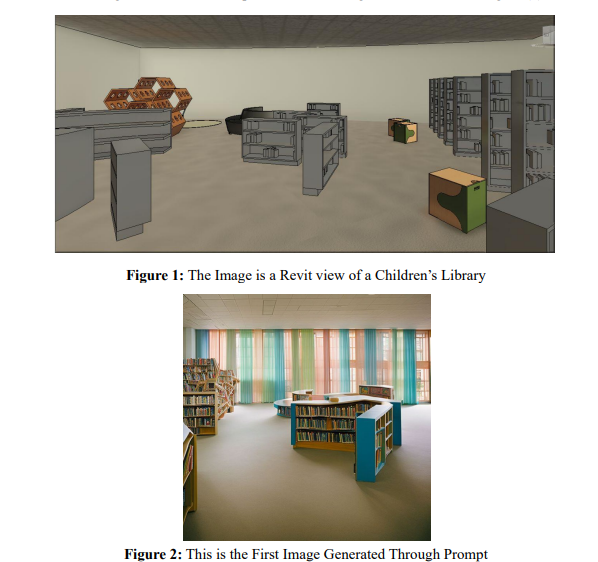

This case study comes from one of our own projects: the interior of a children’s library. Figure 1 shows the original view, with very basic furniture and a simple 3D model.

Figure 3: The Second Image Generated Through Prompt

For Figure 2, the image prompt was “A children’s library with pastel color scheme and curtains and windows”. The “Interior” command was enabled in this case study.

For Figure 3, the image prompt was “A children’s library with bright colored furniture and colorful carpets and a window in background”. The “Interior” command was again enabled.

EvolveLab in Exterior Rendering (Revit)

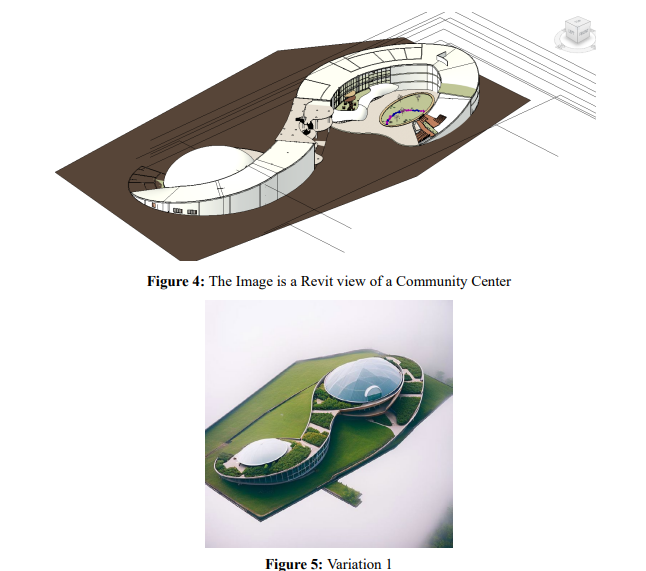

This case study is an aerial view of a community center building. In Figure 4, the prompts were aimed at producing a building with a green roof, green surroundings, and a glass dome.

Figure 8: Variation 4

Conclusion

The transformative impact of AI in architectural rendering is evident through the comparative study of three innovative technologies: DALL-E, Midjourney, and EvolveLab. Each tool brings unique features and functionalities that elevate the visualization and design process for architects, fostering enhanced creativity and efficiency. While DALL-E excels in translating textual concepts into stunning visual representations, Midjourney stands out with its photorealistic rendering and environmental accuracy. On the other hand, EvolveLab empowers architects with interactive design optimization and real-time rendering for iterative design improvements.

Throughout the research, we have explored the strengths and weaknesses of each AI innovation, recognizing their potential to reshape architectural practice. DALL-E's creative exploration and rapid visualization of abstract concepts can inspire imaginative design solutions, although it may require further improvements in controlling design details. Midjourney's realistic simulation of lighting, materials, and environmental conditions greatly enhances visual realism, but it might demand substantial computing resources for complex scenes. EvolveLab's AI-driven design optimization streamlines the architectural design process, but it relies on predefined objectives and might benefit from additional support for generating novel designs.

Furthermore, the case studies demonstrate the practical applications of EvolveLab in interior and exterior design scenarios, showcasing its effectiveness in exploring various design variations and making data-driven decisions. Stable Diffusion, with its advanced AI-based material synthesis and real-time interactive editing, adds significant value to the material selection process, but its limitations lie in the focus on material generation without addressing broader design exploration.

As AI technologies continue to advance, the future of architectural rendering holds promising advancements, including real-time interactive rendering, advanced generative models, and intelligent design assistance. However, architects and the AI community must address ethical considerations, ensuring AI tools support human-centered design principles, prioritize data privacy and security, and remain transparent and explainable in their decision-making.

In a nutshell, the comparative study of DALL-E, Midjourney, and EvolveLab has shed light on the transformative role of AI in architectural rendering. By leveraging the capabilities of these AI tools, architects can enhance their creativity, realism, and efficiency in architectural practice, leading to more innovative, sustainable, and human-centric design solutions for the built environment.

References

1. Ploennigs, J., & Berger, M. (2023). Diffusion models for computational design at the example of floor plans. arXiv preprint arXiv:2307.02511.

2. Ploennigs, J., & Berger, M. (2023). Analysing the usage of AI art tools for architecture. European Conference on Computing in Construction, 8.

3. Paananen, V., Oppenlaender, J., & Visuri, A. (2024). Using text-to-image generation for architectural design ideation. International Journal of Architectural Computing, 22(3), 458-474.

4. Radhakrishnan, A. M. (2023). Is Midjourney-AI a new antihero of architectural imagery and creativity. GSJ, 11(1), 94-104.

5. Yildirim, E. (2022, December). Art and Architecture: Theory, Practice and Experience. (A. P. Kozlu, Ed.) Livre de Lyon, 24.

6. Xie, Y., Pan, Z., Ma, J., Jie, L., & Mei, Q. (2023, April). A prompt log analysis of text-to-image generation systems. In Proceedings of the ACM Web Conference 2023 (pp. 3892-3902).

7. Borji, A. (2022). Generated faces in the wild: Quantitative comparison of Stable Diffusion, Midjourney and DALL-E 2. arXiv preprint arXiv:2210.00586. https://arxiv.org/abs/2210.00586