Research Article - (2024) Volume 5, Issue 3

Ethical AI in Information Technology: Navigating Bias, Privacy, Transparency, and Accountability

Received Date: Jul 01, 2024 / Accepted Date: Aug 05, 2024 / Published Date: Sep 09, 2024

Copyright: ©2024 Dimitrios Sargiotis. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Sargiotis, D. (2024). Ethical AI in Information Technology: Navigating Bias, Privacy, Transparency, and Accountability. Adv Mach Lear Art Inte, 5(3), 01-14.

Abstract

The rapid advancement of artificial intelligence (AI) technologies has fundamentally transformed the landscape of information technology (IT), offering unprecedented opportunities for innovation and efficiency. However, these advancements also bring significant ethical challenges, including issues of bias, privacy, transparency, and accountability. This paper explores these ethical challenges and proposes a comprehensive ethical framework for the responsible development and deployment of AI in IT. Through an examination of historical context, current trends, and detailed case studies, the framework aims to provide actionable guidelines to mitigate biases, protect privacy, enhance transparency, and ensure accountability in AI systems. By fostering ethical AI practices, this framework aspires to support the sustainable and equitable advancement of AI technologies, ultimately benefiting society as a whole.

Keywords

Artificial Intelligence, Information Technology, Ethical AI, Bias in AI, Data Privacy, Transparency, Accountability, Ethical Framework, AI in IT, Machine Learning, AI Governance, Explainable AI, Privacy Protection, Algorithmic Fairness, Ethical Guidelines, AI Systems, Data Security, AI Decision-Making, AI Regulation, Responsible AI Development

Abbreviations

AI: Artificial Intelligence

IT: Information Technology

GPS: General Problem Solver

LIME: Local Interpretable Model-agnostic Explanations

SHAP: SHapley Additive Explanations

COMPAS: Correctional Offender Management Profiling for Alternative Sanctions

GDPR: General Data Protection Regulation

XAI: Explainable AI

NLP: Natural Language Processing

ML: Machine Learning

IEEE: Institute of Electrical and Electronics Engineers

Glossary

Artificial Intelligence (AI): The simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, and self-correction.

Information Technology (IT): The use of computers to store, retrieve, transmit, and manipulate data or information. IT is typically used within the context of business operations.

Machine Learning (ML): A subset of AI that involves the use of algorithms and statistical models to enable computers to perform specific tasks without using explicit instructions, relying instead on patterns and inference.

General Problem Solver (GPS): An early artificial intelligence program created by Allen Newell and Herbert A. Simon that aimed to mimic human problem-solving processes. Local Interpretable Model-agnostic Explanations (LIME): A technique that explains the predictions of any classifier in an interpretable and faithful manner, by learning an interpretable model locally around the prediction.

SHapley Additive exPlanations (SHAP): A method based on cooperative game theory to explain the output of machine learning models by assigning each feature an importance value for a particular prediction.

Correctional Offender Management Profiling for Alternative Sanctions (COMPAS): A risk assessment tool used in the criminal justice system to predict the likelihood of a defendant reoffending.

General Data Protection Regulation (GDPR): A regulation in EU law on data protection and privacy in the European Union and the European Economic Area, also addressing the transfer of personal data outside the EU and EEA areas.

Explainable AI (XAI): Techniques and methods used in the application of artificial intelligence such that the results of the solution can be understood by human experts. Natural Language Processing (NLP): A subfield of AI that focuses on the interaction between computers and humans through natural language, enabling computers to understand, interpret, and generate human language.

Bias in AI: The presence of systematic and unfair discrimination in AI systems that results from the training data or the algorithm itself, leading to prejudiced outcomes.

Transparency in AI: The extent to which the processes behind AI systems and their decision-making are visible and understandable to stakeholders, fostering trust and accountability.

Data Privacy: The aspect of information technology that deals with the ability of individuals to control and protect their personal information and how it is collected, used, and shared.

Algorithmic Fairness: The aspect of information technology that deals with the ability of individuals to control and protect their personal information and how it is collected, used, and shared.

Adversarial Debiasing: A technique in machine learning that aims to reduce bias in AI models by adjusting the training process to counteract bias.

Differential Privacy: A privacy-preserving technique used in data analysis that adds statistical noise to datasets to prevent the identification of individuals within the data.

Ethical AI: The practice of designing, developing, and deploying AI technologies in a manner that is aligned with ethical principles such as fairness, accountability, transparency, and respect for human rights.

Predictive Policing: The use of AI and data analytics to predict and prevent potential criminal activity based on historical crime data and other relevant information.

Deep Learning: A subset of machine learning involving neural networks with many layers (deep networks) that can learn from vast amounts of data and are particularly effective in tasks like image and speech recognition.

Introduction

Overview of AI advancements in IT

The rapid evolution of AI technologies has revolutionized the IT landscape, providing unprecedented opportunities for innovation and efficiency. From automating routine tasks to enabling sophisticated data analytics, AI has fundamentally transformed how organizations operate and deliver value. In particular, AI's ability to process vast amounts of data at high speeds has unlocked new possibilities for personalized services, predictive maintenance, and intelligent decision-making across various industries. However, as AI becomes increasingly integrated into IT systems, it brings with it a host of ethical challenges that must be carefully addressed. These challenges stem from the inherent complexities of AI technologies, including their reliance on large datasets, sophisticated algorithms, and often opaque decisionmaking processes. Among the most pressing ethical concerns are issues of bias, privacy, transparency, and accountability.

Ethical Challenges in Ai Integration

The integration of artificial intelligence (AI) into information technology (IT) systems brings several ethical challenges that must be carefully considered to ensure the responsible development and deployment of AI technologies. These challenges include issues related to bias, privacy, transparency, and accountability.

Methodology: Literature Review

The methodology for this paper involves a detailed literature review to explore the ethical challenges of AI integration in information technology. The literature review process is systematic and comprehensive, aiming to gather, analyze, and synthesize relevant information from various sources. The following subsections outline the approach and steps taken in conducting the literature review.

Formulation of Research Questions

The research questions guiding this literature review are focused on identifying and addressing the ethical challenges related to AI, particularly in terms of bias, privacy, transparency, and accountability. These questions include:

• What are the primary ethical concerns associated with AI in IT?

• How can bias in AI algorithms be identified and mitigated?

• What measures can be implemented to ensure data privacy in AI systems?

• How can transparency and accountability be enhanced in AI development and deployment?

Literature Search Strategy

A thorough search strategy was developed to identify relevant academic papers, industry reports, and regulatory documents. Databases such as Google Scholar, IEEE Xplore, PubMed, and the ACM Digital Library were utilized. Keywords and phrases included "ethical AI," "AI bias," "data privacy in AI," "AI transparency," and "accountability in AI."

Inclusion and Exclusion Criteria

Specific inclusion and exclusion criteria were established to ensure the relevance and quality of the literature. Only peer-reviewed journal articles, conference papers, and authoritative reports published within the last two decades were included. Publications that did not directly address the ethical aspects of AI in information technology were excluded.

Synthesis of Findings

The extracted data was synthesized to provide a comprehensive overview of the current state of research on ethical AI. This involved comparing and contrasting different perspectives, identifying

Data Extraction and Analysis

Relevant information was extracted from the selected literature, focusing on definitions, methodologies, findings, and recommendations related to ethical AI. The data was organized into themes corresponding to the major ethical challenges identified: bias, privacy, transparency, and accountability common themes and gaps in the literature, and integrating insights from various sources to construct a coherent narrative.

Validation and Cross-Referencing

To ensure the validity of the findings, cross-referencing was performed with seminal works and widely recognized guidelines in the field of AI ethics. This step helped to validate the conclusions drawn from the literature review and ensure alignment with established ethical principles.

Literature Review

The integration of AI into IT can be traced back to the early development of computing technologies. Early AI systems, developed in the mid-20th century, were limited in scope and capability, often constrained by the computational power and data availability of the time. However, these early systems laid the groundwork for modern AI by demonstrating the potential of machines to perform tasks that typically required human intelligence, such as problem-solving and pattern recognition [1].

Early AI Developments

One of the earliest milestones in AI was the creation of the Logic Theorist by Allen Newell and Herbert A. Simon. This program was capable of proving mathematical theorems and is considered one of the first successful demonstrations of artificial intelligence [2]. Another significant early development was the General Problem Solver (GPS), also by Newell and Simon, which aimed to solve a wide range of problems using a similar approach [3]. Despite these early successes, AI development experienced several periods of reduced funding and interest, often referred to as "AI winters." These periods were characterized by the realization that early AI systems were unable to deliver on their ambitious promises due to limitations in computational power, algorithmic efficiency, and understanding of human cognition [4].

Emergence of Machine Learning

The resurgence of AI in the 1980s and 1990s was driven by advancements in machine learning, a subfield of AI focused on developing algorithms that can learn from data. The development of backpropagation algorithms for training neural networks marked a significant breakthrough, enabling more complex models and applications [5]. During this period, expert systems, which used rule-based logic to simulate human decision-making, also gained popularity in various industries [6].

The Big Data Era

The early 2000s saw the advent of big data, characterized by the exponential growth of data generated by digital technologies and the internet. This era provided the necessary fuel for modern AI systems, allowing machine learning algorithms to be trained on vast datasets and improving their performance significantly [7]. The combination of increased computational power, sophisticated algorithms, and abundant data led to breakthroughs in natural language processing, computer vision, and other AI applications.

Recent Advances and Ethical Implications

Recent advancements in AI, particularly in deep learning, have expanded the potential applications of AI across various domains, from healthcare to finance to transportation [8]. However, these advancements have also brought to light significant ethical implications. The use of AI in decision-making processes, such as hiring and law enforcement, has raised concerns about bias and discrimination [9]. Additionally, the widespread collection and analysis of personal data by AI systems have heightened privacy concerns [10].

As AI continues to evolve, it is essential to address these ethical challenges by developing frameworks and guidelines that ensure the responsible use of AI technologies. This paper aims to contribute to this ongoing discourse by proposing a comprehensive ethical framework for AI in IT, informed by the historical context and current trends in AI development.

Current Trends

Recent developments in AI have led to significant improvements in various IT applications, such as data analytics, cybersecurity, and user interface design. However, these advancements have also raised concerns about ethical issues such as data privacy, algorithmic bias, and the potential for misuse. This section explores current trends in AI and their ethical considerations.

Data Analytics AI-driven data analytics has transformed how organizations process and interpret vast amounts of information. Machine learning algorithms can now uncover patterns and insights from data that were previously inaccessible, leading to improved decision-making and operational efficiency. For example, predictive analytics is used in healthcare to forecast disease outbreaks and in finance to predict market trends [7]. However, the use of AI in data analytics raises ethical concerns about privacy and data security, particularly when dealing with sensitive personal information.

User Interface Design AI technologies have revolutionized user interface (UI) design by enabling more personalized and intuitive interactions. AI-driven interfaces, such as chatbots and virtual assistants, leverage natural language processing and machine learning to provide users with tailored experiences [13]. While these advancements enhance user satisfaction and engagement, they also raise ethical questions about data collection, consent, and the potential for manipulation. Ensuring that users are aware of how their data is used and that they have control over their interactions with AI-driven interfaces is crucial [14].

Ethical Considerations

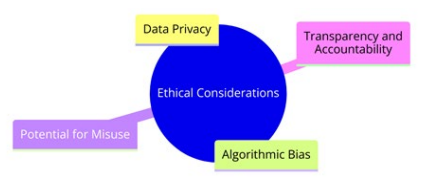

The rapid adoption of AI in these and other areas has highlighted several ethical considerations that need to be addressed:

• Data Privacy: As AI systems increasingly rely on large datasets, protecting the privacy of individuals becomes paramount. This involves implementing robust data protection measures and ensuring transparency in data collection and usage practices [15].

• Algorithmic Bias: AI systems can perpetuate and amplify existing biases if not carefully managed. This can lead to unfair treatment of certain groups and reinforce societal inequalities. Addressing algorithmic bias requires diverse training datasets and ongoing evaluation of AI models [16].

• Potential for Misuse: The dual-use nature of AI technologies means they can be employed for both beneficial and harmful purposes. Ensuring that AI is used responsibly involves creating regulatory frameworks that prevent misuse and promote ethical development and deployment [12].

• Transparency and Accountability: Transparency in AI decisionmaking processes is essential for building trust and ensuring accountability. This involves developing explainable AI models and establishing clear guidelines for responsibility and redress in case of errors or harm [17].

Figure 1 illustrates the main topic, 'Ethical Considerations,' at the center of the diagram. Branching out from the central node are four primary subtopics. The first subtopic is "Data Privacy," which emphasizes the importance of protecting personal information in various applications. The second subtopic is "Algorithmic Bias," highlighting the need to address and mitigate biases that can be embedded in algorithms, potentially leading to unfair outcomes. The third subtopic is "Potential for Misuse," which considers the various ways technologies and data can be misused, raising concerns about ethical implications and the necessity for safeguards. The final subtopic is "Transparency and Accountability," which stresses the importance of making processes and decisions transparent and ensuring that entities are held accountable for their actions.

Figure 1: Ethical Considerations

Existing Ethical Frameworks

Existing ethical frameworks for AI provide a foundation for addressing the challenges posed by AI integration in IT. This section reviews key ethical principles, such as fairness, accountability, and transparency, and discusses their application in the context of AI in IT.

Fairness Fairness is a crucial principle in AI ethics, aimed at ensuring that AI systems do not produce biased or discriminatory outcomes. This involves both procedural fairness, which focuses on the processes used to develop and implement AI systems, and substantive fairness, which concerns the outcomes generated by these systems [18]. In the context of AI in IT, fairness requires that datasets are representative of the diverse populations affected by AI decisions and that algorithms are designed to minimize biases.

For example, in hiring algorithms, fairness can be promoted by using diverse training datasets that include various demographic groups and by regularly auditing the algorithms to detect and mitigate any biases that might arise [19]. Ensuring fairness in AI systems helps prevent discrimination and promotes equal opportunities for all individuals.

Accountability Accountability in AI involves establishing mechanisms to ensure that individuals and organizations can be held responsible for the actions and decisions made by AI systems. This includes creating clear lines of responsibility and ensuring that there are processes in place for addressing any harm caused by AI systems [20]. In the IT sector, accountability is crucial for maintaining trust and ensuring that AI systems are used ethically and responsibly.

One approach to enhancing accountability is to implement regular audits and evaluations of AI systems to assess their performance and identify any potential issues. Additionally, organizations should establish clear policies for responding to incidents where AI systems cause harm, including providing mechanisms for redress and compensation [21]. By fostering accountability, organizations can ensure that AI systems are aligned with ethical standards and societal expectations.

Transparency Transparency in AI involves making the decision-making processes of AI systems understandable and accessible to users and stakeholders. This is essential for building trust and enabling informed decision-making by individuals affected by AI systems [17]. In the context of AI in IT, transparency can be achieved by developing explainable AI (XAI) models that provide clear and understandable explanations for their decisions.

Techniques such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) can help make AI systems more transparent by highlighting the factors that influence their decisions [22]. Additionally, providing comprehensive documentation and clear communication about how AI systems operate can further enhance transparency. Ensuring transparency helps users understand how AI systems make decisions and fosters greater trust in their use.

Ethical Principles and Guidelines Several organizations and initiatives have developed ethical principles and guidelines for AI to promote fairness, accountability, and transparency. For example, the European Commission’s Ethics Guidelines for Trustworthy AI emphasize the importance of respecting human autonomy, preventing harm, ensuring fairness, and promoting transparency [23]. Similarly, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems provides a framework for addressing ethical issues in AI and promoting responsible development and deployment [24]. These frameworks and guidelines provide valuable resources for organizations seeking to develop and implement AI systems ethically. By adhering to these principles, organizations can ensure that their AI systems are aligned with societal values and contribute positively to society.

Ethical Challenges in AI Integration

Bias in AI Algorithms

AI systems learn from data, and if the training data contains biases, the AI will likely reproduce and even amplify these biases. This can lead to unfair outcomes in critical areas such as hiring, lending, and law enforcement. For example, facial recognition systems have been shown to have higher error rates for certain demographic groups, raising concerns about discrimination and equity. Addressing bias in AI involves not only improving the diversity and quality of training data but also developing algorithms that can identify and mitigate biases [9].

Hiring Practices In hiring, AI systems are increasingly used to screen resumes and predict job performance. However, these systems can inherit biases present in historical hiring data, potentially disadvantaging candidates from underrepresented groups. Studies have shown that AI systems trained on biased datasets can replicate gender and racial biases, leading to discriminatory hiring practices [19].

Lending Decisions Similarly, in the lending industry, AI algorithms used to assess creditworthiness can produce biased outcomes if they rely on data that reflects historical discrimination. For instance, minority groups may be unfairly denied loans or offered less favorable terms based on biased credit scoring models [25]. Addressing these biases requires careful examination and adjustment of the training data, as well as the implementation of fairness constraints in the algorithms.

Law Enforcement

In law enforcement, AI systems such as predictive policing tools and facial recognition software have been criticized for their potential to perpetuate existing biases. Research has shown that these systems can disproportionately target minority communities, leading to over-policing and wrongful arrests. Mitigating these biases involves not only improving data collection practices but also ensuring that AI systems are subject to rigorous ethical standards and oversight.

Strategies for Mitigation To mitigate bias in AI systems, several strategies can be employed:

• Diverse and Representative Data: Ensuring that training datasets are diverse and representative of all demographic groups is crucial. This includes actively seeking out and including data from underrepresented groups to balance the training set.

• Algorithmic Fairness Techniques: Developing and applying algorithmic techniques to detect and reduce bias can help create fairer AI systems. Techniques such as reweighting, fairness constraints, and adversarial debiasing are valuable tools in this effort [26].

• Human Oversight: Incorporating human oversight in AI decision-making processes can help identify and correct biases that algorithms may overlook. This includes regular audits and evaluations of AI systems by diverse teams of experts.

• Transparency and Accountability: Ensuring transparency in AI development and deployment processes can help build trust and facilitate the identification of biases. This involves clear documentation of data sources, algorithmic design, and decisionmaking criteria.

Fig. 2 illustrates various methods to address bias in AI systems. At the central theme is "Strategies for Mitigation," from which four main strategies branch out. The first strategy is "Diverse and Representative Data," emphasizing the importance of using training datasets that are inclusive and representative of all demographic groups. This involves actively including data from underrepresented groups to balance the training set. The second strategy is "Algorithmic Fairness Techniques," which involves developing and applying methods to detect and reduce bias. Techniques such as reweighting, fairness constraints, and adversarial debiasing are crucial tools for this purpose, as noted by Kamiran, Calders, and Pechenizkiy [26]. The third strategy is "Human Oversight," which advocates for incorporating human oversight in AI decision-making processes to identify and correct biases that algorithms may miss. This includes regular audits and evaluations of AI systems by diverse teams of experts. The final strategy is "Transparency and Accountability," which stresses the need for transparency in AI development and deployment processes to build trust and facilitate the identification of biases. This involves clear documentation of data sources, algorithmic design, and decision-making criteria. Implementing these strategies, it is possible to reduce the risk of biased outcomes and promote fairness in AI applications.

Figure 2: Strategies for Mitigation in AI systems

Privacy Concerns

The deployment of AI systems often requires the collection and analysis of large volumes of personal data. This raises significant privacy concerns, as individuals may be unaware of how their data is being used or may not have consented to its use in AI applications. The potential for misuse of personal data by AI systems, whether through data breaches or unauthorized surveillance, underscores the need for robust privacy protections. Ensuring data privacy involves implementing stringent data protection measures and fostering transparency in data collection and usage practices.

Data Collection and Consent AI systems rely heavily on data, which often includes sensitive personal information. This data collection can occur through various means, such as online activities, smart devices, and surveillance systems. Individuals may not always be aware of the extent to which their data is being collected or how it is being used, leading to concerns about consent and autonomy. Research indicates that many AI systems operate without explicit user consent, raising ethical and legal questions.

Risks of Data Breaches The centralized storage of vast amounts of personal data in AI systems makes them attractive targets for cyber-attacks. Data breaches can result in the unauthorized access, use, or disclosure of personal information, causing significant harm to individuals. High-profile data breaches, such as those involving major corporations and government databases, have highlighted the vulnerabilities in existing data protection frameworks [27].

Unauthorized Surveillance AI technologies, particularly those used in surveillance, pose significant privacy risks. Systems such as facial recognition and predictive policing can be deployed without adequate oversight, leading to invasive monitoring and tracking of individuals. This nauthorized surveillance can have chilling effects on personal freedoms and civil liberties, as individuals may alter their behavior due to the perception of being constantly watched [28].

Implementing these strategies, organizations can enhance data privacy and address the ethical challenges associated with AI technologies.

Strategies for Ensuring Data Privacy To address these privacy concerns, several strategies can be employed:

• Robust Data Protection Measures: Implementing advanced data encryption, anonymization techniques, and secure data storage solutions can help protect personal information from unauthorized access and breaches. These measures are critical in maintaining the integrity and confidentiality of personal data.

• Transparency in Data Practices: Organizations should be transparent about their data collection, usage, and sharing practices. Providing clear and accessible information to users about how their data is being used, and obtaining informed consent, is essential in building trust and ensuring compliance with privacy regulations.

• Regulatory Compliance: Adhering to data protection laws and regulations, such as the General Data Protection Regulation (GDPR) in the European Union, is crucial. These regulations provide guidelines on data processing, user consent, and individuals' rights, ensuring that AI systems operate within legal and ethical boundaries.

• Ethical AI Design: Designing AI systems with privacy in mind from the outset, often referred to as "privacy by design," involves integrating privacy considerations into the development process. This includes conducting privacy impact assessments and regularly reviewing and updating privacy practices.

Fig. 3 illustrates various methods to protect data privacy in AI systems. The central theme is "Strategies for Ensuring Data Privacy," from which four main strategies branch out. The first strategy is "Robust Data Protection Measures," emphasizing the implementation of strong security protocols to safeguard data from breaches and unauthorized access. The second strategy is "Transparency in Data Practices," which involves being open about how data is collected, used, and shared, thereby building trust with users and stakeholders. The third strategy is "Regulatory Compliance," ensuring that all data practices adhere to relevant laws and regulations to protect individuals' privacy rights. The final strategy is "Ethical AI Design," which advocates for designing AI systems with ethical considerations in mind, ensuring that data privacy is prioritized throughout the development and deployment process. This figure provides a clear visual representation of the fundamental strategies required to ensure data privacy in AI systems. Implementing these strategies, organizations can enhance data privacy and address the ethical challenges associated with AI technologies.

Figure 3: Strategies for Ensuring Data Privacy

Transparency and Accountability

AI systems are frequently described as "black boxes" due to their complex and opaque nature. This lack of transparency can make it difficult for users to understand how AI decisions are made, which can undermine trust in AI technologies. Moreover, when AI systems make errors or cause harm, determining accountability can be challenging. Establishing transparency involves developing explainable AI models that provide insights into their decisionmaking processes, while accountability requires clear frameworks for responsibility and redress in the event of adverse outcomes.

The Black Box Problem The "black box" nature of AI systems refers to the difficulty in understanding and interpreting the decision-making processes of complex algorithms, particularly deep learning models. These models operate with numerous parameters and layers of computation, making their inner workings opaque even to experts [29]. This opacity poses significant challenges for ensuring transparency and accountability, as stakeholders cannot easily trace or explain how specific decisions are reached.

Trust and User Understanding Transparency is critical for building trust in AI systems. When users cannot understand how an AI system arrives at its decisions, they may be reluctant to rely on or accept its outcomes. This is particularly problematic in high-stakes applications such as healthcare, finance, and criminal justice, where the consequences of AI decisions can be profound [17]. Enhancing transparency through explainable AI (XAI) techniques can help demystify these systems, providing users with clearer insights into how decisions are made and why.

Accountability in AI Systems Accountability in AI systems involves determining who is responsible when an AI system causes harm or makes an error. This is often complicated by the involvement of multiple parties in the development, deployment, and operation of AI systems, including developers, data scientists, and end-users [20]. Establishing clear accountability frameworks is essential for ensuring that appropriate actions can be taken in response to adverse outcomes and that responsible parties can be held liable.

Strategies for Enhancing Transparency and Accountability To address the challenges of transparency and accountability in AI systems, several strategies can be employed:

• Explainable AI (XAI) Models: Developing AI models that can provide clear and understandable explanations for their decisions is crucial. Techniques such as local interpretable model-agnostic explanations (LIME) and SHapley Additive exPlanations (SHAP) can help make AI systems more transparent by highlighting the factors that influence their decisions [22].

• Documentation and Auditing: Comprehensive documentation of AI systems, including data sources, algorithmic design, and decision-making processes, is essential for transparency. Regular audits and evaluations of AI systems can help ensure that they operate as intended and adhere to ethical standards [14].

• Clear Accountability Frameworks: Establishing clear frameworks that define the responsibilities of various stakeholders in the AI lifecycle is crucial for accountability. This includes setting out protocols for reporting and addressing errors, as well as mechanisms for redress in case of harm [21].

• Ethical and Regulatory Oversight: Implementing ethical guidelines and regulatory frameworks can help ensure that AI systems are developed and used responsibly. This involves collaboration between technologists, ethicists, policymakers, and the public to create standards that promote transparency and accountability [30].

Fig. 4 provides a visual representation of key strategies for enhancing transparency and accountability in AI systems. The central theme is "Strategies for Enhancing Transparency and Accountability," from which four main strategies branch out. The first strategy is "Explainable AI (XAI) Models," which emphasizes the development of AI models that can provide clear and understandable explanations for their decisions and actions. This helps users and stakeholders understand how decisions are made, thereby building trust in the AI system. The second strategy is "Documentation and Auditing," which involves maintaining detailed records of AI development and deployment processes. Regular audits of these records can help identify and address potential issues, ensuring that AI systems are used ethically and responsibly. The third strategy is "Clear Accountability Frameworks," which advocates for establishing clear frameworks that define who is responsible for the outcomes of AI systems. This includes setting guidelines for accountability at each stage of AI development and deployment to ensure that any issues can be addressed promptly and effectively. The final strategy is "Ethical and Regulatory Oversight," which stresses the importance of having ethical guidelines and regulatory bodies in place to oversee AI systems. This oversight helps ensure that AI technologies are developed and used in ways that are consistent with societal values and legal standards.

Adopting these strategies, organizations can enhance the transparency and accountability of their AI systems, fostering greater trust and ensuring responsible AI practices.

Figure 4: Strategies for Enhancing Transparency and Accountability

The Need for an Ethical Framework

Given these ethical challenges, there is a critical need for a comprehensive framework to guide the responsible development and deployment of AI in IT. Such a framework should be grounded in ethical principles that prioritize human rights, fairness, and societal well-being. It should also be adaptable to the rapidly changing technological landscape, incorporating input from a diverse range of stakeholders, including technologists, ethicists, policymakers, and the public.

Ethical Principles

A robust ethical framework for AI in IT must be founded on key ethical principles:

• Human Rights: AI systems should respect and uphold human rights, including privacy, freedom of expression, and nondiscrimination [31]. Ensuring that AI technologies do not infringe on these rights is paramount to maintaining public trust and protecting individuals.

• Fairness: Fairness involves ensuring that AI systems do not produce biased or unjust outcomes. This includes addressing both direct discrimination and disparate impacts on different demographic groups [32].

• Societal Well-being: AI should be developed and deployed with the broader societal impact in mind. This means promoting benefits that contribute to societal good and avoiding harms that can exacerbate social inequalities or disrupt communities.

Stakeholder Involvement

For an ethical framework to be effective, it must include input from a diverse range of stakeholders. This ensures that the perspectives and concerns of various groups are considered, leading to more holistic and equitable AI systems:

• Technologists: Engineers and developers who build AI systems need to understand and integrate ethical considerations into their design processes.

• Ethicists: Experts in ethics can provide critical insights into the moral implications of AI technologies and help shape guidelines that promote ethical practices.

• Policymakers: Government officials and regulators play a crucial role in creating and enforcing policies that ensure the ethical use of AI.

• Public: Engaging with the public is essential to understanding societal values and concerns, ensuring that AI systems align with the interests and needs of the broader community.

Purpose of the Framework

This paper seeks to address the ethical challenges associated with AI in IT by proposing a detailed ethical framework. Drawing on interdisciplinary research and practical case studies, the framework aims to provide actionable guidelines for mitigating biases, protecting privacy, enhancing transparency, and ensuring accountability in AI systems. By fostering ethical AI practices, the framework aspires to support the sustainable and equitable advancement of AI technologies, ultimately benefiting society as a whole.

Actionable Guidelines

The proposed ethical framework will include specific, actionable guidelines to help organizations develop and deploy AI systems responsibly:

• Mitigating Biases: Strategies for identifying and reducing biases in AI systems to promote fairness and equity.

• Protecting Privacy: Measures to safeguard personal data and ensure user consent, enhancing privacy protections.

• Enhancing Transparency: Approaches to developing explainable AI models and providing clear documentation to improve transparency.

• Ensuring Accountability: Establishing clear accountability frameworks to determine responsibility and provide redress in case of harm

Fig. 5 illustrates the proposed ethical framework designed to help organizations develop and deploy AI systems responsibly. The central theme is "Actionable Guidelines," from which four main strategies branch out. The first strategy is "Mitigating Biases," which focuses on implementing measures to reduce biases in AI systems. The second strategy is "Protecting Privacy," emphasizing the importance of safeguarding personal information throughout AI processes. The third strategy is "Enhancing Transparency," which involves making AI development and deployment processes clear and understandable to build trust. The final strategy is "Ensuring Accountability," which stresses the importance of holding organizations and individuals responsible for the outcomes and impacts of AI systems. This figure provides a clear visual representation of the key guidelines to ensure ethical AI development and deployment. By implementing these guidelines, organizations can address the ethical challenges of AI and promote practices that are in line with societal values and expectations.

Figure 5: Ethical framework actionable Guidelines

Case Studies

Bias in AI Algorithms

AI algorithms are often criticized for perpetuating biases present in the training data. This case study examines instances of algorithmic bias in IT applications, such as hiring processes and loan approvals, and discusses strategies for mitigating these biases.

Hiring Processes One notable case of bias in AI-driven hiring processes involves Amazon's AI recruitment tool. In 2018, it was revealed that Amazon had developed an AI tool to automate the hiring process, but the system was found to be biased against women. The tool was trained on resumes submitted over a ten-year period, most of which came from men, and it penalized resumes that included the word "women's" and downgraded graduates of all-women's colleges. As a result, the AI system favored male candidates over female ones [32]. Strategies for Mitigation:

• Diverse Training Data: Companies can ensure training datasets are representative of all demographic groups by actively including data from underrepresented groups.

• Bias Audits: Regular audits can help identify and address any discriminatory patterns in AI systems [19].

• Algorithmic Fairness Techniques: Techniques such as reweighting, fairness constraints, and adversarial debiasing can help create fairer AI models [26].

Loan Approvals In the financial sector, an investigation into algorithmic bias was conducted by the University of California, Berkeley, which examined mortgage lending decisions made by algorithmic systems. The study found that both traditional and algorithmic lending practices charged African American and Hispanic borrowers higher interest rates than white borrowers with similar credit profiles. This discrepancy highlighted the presence of racial bias in AI-driven loan approval systems [33]. Strategies for Mitigation:

• Fair Lending Laws Compliance: Ensuring AI systems comply with fair lending laws and regulations that prohibit discriminatory practices.

• Transparent Criteria: Clearly defining and disclosing the criteria used by AI algorithms to make lending decisions can help reduce biases and increase trust [25].

• Continuous Monitoring: Implementing continuous monitoring systems to regularly check for and rectify biases in lending decisions.

Predictive Policing Another significant case of bias in AI is related to predictive policing. In 2016, ProPublica published an investigation into COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), a risk assessment tool used in the US criminal justice system. The investigation found that COMPAS was biased against African American defendants, who were disproportionately labeled as high risk for future crime compared to white defendants, despite similar histories [34]. Strategies for Mitigation:

• Bias Detection and Correction: Developing techniques to detect and correct biases in predictive models.

• Ethical Review and Oversight: Establishing ethical review boards to oversee the deployment and use of predictive policing tools.

• Community Involvement: Engaging with communities to understand the impact of these tools and ensure they are used fairly and justly

Privacy Concerns

AI systems often require vast amounts of data, raising significant privacy concerns. The next case studies explore the ethical implications of data collection and usage in AI-driven IT systems, proposing measures to enhance data privacy and user consent.

Facebook-Cambridge Analytica Scandal

One of the most prominent cases highlighting privacy concerns in AI systems is the Facebook-Cambridge Analytica scandal. In 2018, it was revealed that Cambridge Analytica, a political consulting firm, had harvested the personal data of millions of Facebook users without their consent. This data was used to create psychographic profiles and target individuals with personalized political advertisements during the 2016 US presidential election [35].

Ethical Implications:

• Unauthorized Data Collection: The scandal involved the collection of data from users who had not consented to its use for political profiling and advertisement.

• Manipulation and Influence: The use of personal data to influence political opinions and behaviors raised significant ethical concerns about manipulation and the undermining of democratic processes. Measures to Enhance Data Privacy and User Consent:

• Stronger Data Protection Regulations: The introduction of the General Data Protection Regulation (GDPR) in the European Union has set a higher standard for data privacy, requiring explicit consent from users for data collection and use.

• Transparency and User Control: Companies should provide clear information about data collection practices and give users control over their personal data, including the ability to opt-out and delete their data.

Google's Project Nightingale In 2019, Google faced scrutiny over its Project Nightingale, a partnership with Ascension, a large healthcare provider. The project involved the transfer of millions of patients' medical records to Google without the patients' knowledge or consent. The data was intended to develop AI-driven healthcare solutions, but the lack of transparency and consent raised significant privacy concerns [36].

Ethical Implications:

• Lack of Consent: Patients were not informed that their medical records were being shared with Google, violating their right to privacy and informed consent.

• Data Security Risks: The centralization of sensitive health data posed risks of data breaches and unauthorized access. Measures to Enhance Data Privacy and User Consent:

• Informed Consent: Ensuring that patients are fully informed about how their data will be used and obtaining explicit consent before sharing their medical records.

• Data Anonymization: Implementing robust data anonymization techniques to protect patient identities and reduce the risk of reidentification.

Apple's Differential Privacy Apple has implemented differential privacy techniques to enhance user privacy while collecting data to improve its services. Differential privacy adds statistical noise to the data, making it difficult to identify individual users. This approach allows Apple to gather useful insights while protecting user privacy [37].

Ethical Implications:

• Balancing Utility and Privacy: Differential privacy aims to strike a balance between data utility and user privacy, ensuring that personal information remains protected while still providing valuable insights.

• Transparency in Data Practices: Apple's implementation of differential privacy demonstrates a commitment to transparency and user trust. Measures to Enhance Data Privacy and User Consent:

• Privacy-Preserving Technologies: Adopting privacy-preserving technologies such as differential privacy can help protect user data while still enabling data-driven innovations.

• Clear Communication: Providing users with clear explanations of how their data is protected and how differential privacy works can enhance trust and consent.

Transparency and Accountability

Transparency and accountability are critical for building trust in AI systems. This case study investigates the challenges of achieving transparency in AI algorithms and outlines best practices for ensuring accountability in AI development and deployment.

COMPAS Recidivism Algorithm The COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) recidivism algorithm is a widely discussed case regarding transparency and accountability in AI. COMPAS is used by courts in the United States to predict the likelihood of a defendant reoffending. However, a 2016 investigation by ProPublica found that the algorithm was biased against African American defendants, who were falsely flagged as future criminals at almost twice the rate of white defendants [34].

Challenges:

• Opaque Decision-Making: The proprietary nature of the COMPAS algorithm meant that its decision-making process was not transparent to the public or even to the judges using it.

• Lack of Accountability: When the algorithm's predictions were wrong, there was no clear accountability mechanism for addressing the errors and their impacts on defendants' lives. Best Practices for Transparency and Accountability:

• Explainable AI: Developing AI models that provide clear, understandable explanations for their decisions can help improve transparency.

• Regular Audits: Conducting regular audits of AI systems to identify biases and errors can ensure ongoing accountability.

• Open Algorithms: Where possible, using open-source algorithms can enhance transparency and allow for public scrutiny.

Google's Search Algorithm Google's search algorithm has faced scrutiny regarding its transparency and accountability, particularly with its influence on information accessibility and business visibility. The algorithm determines the ranking of search results, impacting how information is presented to users. Concerns have been raised about the lack of transparency in how these rankings are determined and the potential for bias [38].

Challenges:

• Algorithmic Opacity: The complexity and proprietary nature of Google's search algorithm mean that the criteria for ranking results are not fully transparent.

• Accountability for Bias: The lack of transparency makes it difficult to hold Google accountable for biases that might arise in search results, potentially impacting public opinion and market competition. Best Practices for Transparency and Accountability:

• Transparent Guidelines: Providing more detailed guidelines on how search rankings are determined can help users understand the process.

• Third-Party Audits: Allowing third-party audits of search algorithms can help ensure they are fair and unbiased.

• User Feedback: Incorporating user feedback mechanisms to identify and address potential biases or errors in search results.

Microsoft's Tay Chatbot Microsoft's Tay chatbot, an AI designed to engage with users on Twitter, quickly became a case study in transparency and accountability after it started producing inappropriate and offensive tweets. Within 24 hours of its launch in 2016, Tay was manipulated by users to make racist and misogynistic statements, leading Microsoft to shut it down [39].

Challenges:

• Transparency in AI Behavior: The rapid and unexpected behavior of Tay highlighted the challenges in predicting and controlling AI interactions in public domains.

• Lack of Accountability: The incident raised questions about accountability in AI development, particularly in terms of prelaunch testing and post-launch monitoring.

• Best Practices for Transparency and Accountability:

• Robust Pre-Deployment Testing: Thorough testing of AI systems in controlled environments can help identify potential issues before public release.

• Real-Time Monitoring: Implementing real-time monitoring and intervention mechanisms can help mitigate unexpected behaviors.

• Clear Usage Policies: Establishing clear usage policies and guidelines for interaction with AI systems can help manage user behavior and expectations.

Proposed Ethical Framework

Building on the literature review and case studies, this section proposes a comprehensive ethical framework for AI in IT. The framework includes guidelines for:

Bias Mitigation

Implementing strategies to identify and reduce biases in AI algorithms is crucial for ensuring fairness and equity in AI systems. For example, Barocas, Hardt, and Narayanan emphasize the importance of using diverse and representative training datasets to mitigate biases. Additionally, Raghavan et al. suggest regular audits and the application of fairness constraints and adversarial debiasing techniques to further address algorithmic biases.

Privacy Protection

Ensuring robust data privacy measures and obtaining informed consent from users is essential to protect individuals' rights and maintain trust in AI systems. Tene and Polonetsky highlight the importance of transparency in data collection practices and the implementation of user control mechanisms [15]. Similarly, Rubinstein and Good discuss the limitations of compliance with data protection principles and advocate for stronger privacy measures and clearer communication with users [40].

Transparency

Developing clear documentation and communication strategies to enhance the transparency of AI systems is necessary for building trust and facilitating understanding of AI processes. Doshi-Velez and Kim argue for the development of explainable AI models that provide clear, understandable explanations for their decisions [17]. Ribeiro, Singh, and Guestrin demonstrate the effectiveness of techniques such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) in making AI systems more transparent [22].

Accountability

Establishing mechanisms for accountability, including regular audits and the inclusion of diverse stakeholders in the development process, is vital for ensuring responsible AI development and deployment. Wachter, Mittelstadt, and Floridi discuss the challenges of ensuring accountability in automated decision-making and emphasize the need for clear guidelines and mechanisms for redress [20]. Diakopoulos underscores the importance of accountability frameworks that define the responsibilities of various stakeholders and provide processes for addressing harm caused by AI systems [21]. Fig. 6 outlines four key areas for addressing ethical concerns in AI systems. At the center is the main topic, "Proposed Ethical Framework," from which four primary strategies branch out. The first strategy is "Bias Mitigation," which focuses on methods to identify and reduce biases within AI systems. The second strategy is "Privacy Protection," emphasizing the importance of safeguarding personal data and ensuring privacy in AI applications. The third strategy is "Transparency," which involves making AI processes and decisions clear and understandable to build trust and facilitate accountability. The final strategy is "Accountability," which ensures that individuals and organizations are held responsible for their AI systems' actions and decisions. This figure visually represents the critical components of a comprehensive ethical framework for AI.

Figure 6: Proposed Ethical Framework

Conclusion

As artificial intelligence (AI) continues to transform the information technology (IT) sector, addressing the associated ethical challenges is paramount to ensuring that these technologies benefit society as a whole. This paper has highlighted the importance of ethical considerations in AI development, particularly focusing on issues of bias, privacy, transparency, and accountability. By examining historical contexts, current trends, and practical case studies, we have demonstrated the multifaceted nature of these ethical challenges and the necessity for a robust ethical framework.

The proposed ethical framework provides actionable guidelines to mitigate biases, protect privacy, enhance transparency, and ensure accountability in AI systems. These guidelines emphasize the importance of using diverse and representative training datasets, implementing robust data protection measures, developing explainable AI models, and establishing clear accountability frameworks. By adopting these strategies, organizations can create AI systems that are not only technically advanced but also ethically sound.

The case studies explored in this paper, such as the biases found in hiring processes, loan approvals, and predictive policing, illustrate the real-world implications of ethical lapses in AI systems. Privacy concerns, exemplified by incidents like the Facebook-Cambridge Analytica scandal and Google's Project Nightingale, underscore the need for stringent data protection measures and transparent data practices. Additionally, challenges related to transparency and accountability in AI systems, as seen in the COMPAS recidivism algorithm and Google's search algorithm, highlight the necessity for explainable AI and robust oversight mechanisms.

Addressing these challenges requires a collaborative effort involving technologists, ethicists, policymakers, and the public. By incorporating diverse perspectives and engaging with a broad range of stakeholders, we can ensure that AI technologies are developed and deployed in ways that align with societal values and ethical standards.

Future research should focus on refining the proposed ethical framework and exploring its application in various contexts to promote sustainable and equitable technological progress. Continued dialogue and collaboration among stakeholders will be crucial in adapting the framework to the rapidly evolving landscape of AI. Ultimately, by fostering ethical AI practices, we can harness the full potential of AI technologies to drive innovation and improve societal well-being, while safeguarding fundamental human rights and values [41].

References

1. McCarthy, J., Minsky, M. L., Rochester, N., & Shannon, C. E. (2006). A proposal for the dartmouth summer research project on artificial intelligence, august 31, 1955. AI magazine, 27(4), 12-12.

2. Newell, A., & Simon, H. (1956). The logic theory machine--A complex information processing system. IRE Transactions on information theory, 2(3), 61-79.

3. Newell, A., & Simon, H. A. (1961). GPS, a program that simulates human thought.

4. Crevier, D. (1993). AI: the tumultuous history of the search for artificial intelligence. Basic Books, Inc..

5. Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. nature, 323(6088), 533-536.

6. Feigenbaum, E. A. (1992). The Fifth Generation: Artificial Intelligence and Japan's Computer Challenge to the World. Addison-Wesley.

7. Chen, M., Mao, S., & Liu, Y. (2014). Big data: A survey. Mobile networks and applications, 19, 171-209.

8. LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. nature, 521(7553), 436-444.

9. Barocas, S., & Selbst, A. D. (2016). Big data's disparate impact. Calif. L. Rev., 104, 671.

10. Crawford, K., & Calo, R. (2016). There is a blind spot in AI research. Nature, 538(7625), 311-313.

11. Buczak, A. L., & Guven, E. (2015). A survey of data mining and machine learning methods for cyber security intrusion detection. IEEE Communications surveys & tutorials, 18(2), 1153-1176.

12. Brundage, M., Avin, S., Clark, J., Toner, H., Eckersley, P., Garfinkel, B., ... & Amodei, D. (2018). The malicious use of artificial intelligence: Forecasting, prevention, and mitigation. arXiv preprint arXiv:1802.07228.

13. Dale, R. (2016). The return of the chatbots. Natural language engineering, 22(5), 811-817.

14. Binns, R., Van Kleek, M., Veale, M., Lyngs, U., Zhao, J., & Shadbolt, N. (2018, April). 'It's Reducing a Human Being to a Percentage' Perceptions of Justice in Algorithmic Decisions. In Proceedings of the 2018 Chi conference on human factors in computing systems (pp. 1-14).

15. Tene, O., & Polonetsky, J. (2012). Big data for all: Privacy and user control in the age of analytics. Nw. J. Tech. & Intell. Prop., 11, 239.

16. O'neil, C. (2017). Weapons of math destruction: How big data increases inequality and threatens democracy. Crown.

17. Doshi-Velez, F., & Kim, B. (2017). Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608.

18. Barocas, S., Hardt, M., & Narayanan, A. (2023). Fairness and machine learning: Limitations and opportunities. MIT press.

19. Raghavan, M., Barocas, S., Kleinberg, J., & Levy, K. (2020, January). Mitigating bias in algorithmic hiring: Evaluating claims and practices. In Proceedings of the 2020 conference on fairness, accountability, and transparency (pp. 469-481).

20. Wachter, S., Mittelstadt, B., & Floridi, L. (2017). Why a right to explanation of automated decision-making does not exist in the general data protection regulation. International data privacy law, 7(2), 76-99.

21. Diakopoulos, N. (2016). Accountability in algorithmic decision making. Communications of the ACM, 59(2), 56-62.

22. Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August). " Why should i trust you?" Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135-1144).

23. European Commission. (2019). Ethics Guidelines for Trustworthy AI.

24. IEEE. (2019). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems.

25. Hurley, M., & Adebayo, J. (2016). Credit scoring in the era of big data. Yale JL & Tech., 18, 148.

26. Kamiran, F., Calders, T., & Pechenizkiy, M. (2010, December). Discrimination aware decision tree learning. In 2010 IEEE international conference on data mining (pp. 869-874). IEEE.

27. Landwehr, C. E. (2020). Privacy and Security in the Era of Big Data and Artificial Intelligence. Communications of the ACM, 63(8), 5-5.

28. Kang, J. (2018). The Algorithmic Leviathan: Arbitrariness, Fairness, and Opportunity in Algorithmic Decision Making. California Law Review, 106(6), 1115-1160.

29. Lipton, Z. C. (2018). The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue, 16(3), 31-57.

30. Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature machine intelligence, 1(9), 389-399.

31. Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., ... & Vayena, E. (2018). AI4People—an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds and machines, 28, 689-707.

32. Dastin, J. (2022). Amazon scraps secret AI recruiting tool that showed bias against women. In Ethics of data and analytics (pp. 296-299). Auerbach Publications.

33. Bartlett, R., Morse, A., Stanton, R., & Wallace, N. (2022). Consumer-lending discrimination in the FinTech era. Journal of Financial Economics, 143(1), 30-56.

34. Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2022). Machine bias. In Ethics of data and analytics (pp. 254-264). Auerbach Publications.

35. Cadwalladr, C., & Graham-Harrison, E. (2018). Revealed: 50 million Facebook profiles harvested for Cambridge Analytica in major data breach. The guardian, 17(1), 22.

36. Copeland, R. (2019). Google’s ‘Project Nightingale’ Gathers Personal Health Data on Millions of Americans. The Wall Street Journal.

37. Apple. (2017). Differential Privacy.

38. Introna, L. D., & Nissenbaum, H. (2000). Shaping the Web: Why the politics of search engines matters. The information society, 16(3), 169-185.

39. Neff, G. (2016). Talking to bots: Symbiotic agency and the case of Tay. International Journal of Communication.

40. Rubinstein, I. S., & Good, N. (2020). The Trouble with Big Data: Data Protection Principles and the Limits of Compliance. Information & Communications Technology Law, 29(2), 198- 223.

41. Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 2053951716679679.